Secure, Audit-Ready AI Agent Orchestrator for Your Enterprise


Emerging Generative AI Tools: Alternatives to Microsoft Copilot

ChatGPT, Microsoft Copilot, and similar generative AI tools have transformed how people write, think, and work. Tools like ChatGPT Enterprise now offer enterprise-grade performance, security, and compliance. But when it comes to scaling AI across an entire organization, the conversation shifts from capability to cost, integration, governance, and control.

That’s where Microsoft Copilot often enters the picture, and with good reason. Copilot is an excellent product, tightly woven into the Microsoft 365 ecosystem and backed by Azure-hosted GPT models. It enables seamless AI experiences across Word, Excel, Outlook, and Teams, making it a natural choice for Microsoft-centric enterprises.

So why are companies still actively exploring alternatives?

Because when rolled out enterprise-wide, Copilot’s seat-based pricing quickly becomes expensive. Licenses are limited, adoption is uneven, and extending the same capabilities across non-Microsoft environments like CRMs, project tools, or customer portals isn’t always feasible. What enterprises are really asking is:

Can we get a Copilot-like experience, powered by the same world-class models, but with better economics, broader reach, and stronger governance?

Copilot Alternatives: The Expanding Generative AI Toolset Across Enterprises

Enterprises looking for a Microsoft Copilot alternative today have an expanding field of options. These tools serve a wide variety of business needs, from research and summarization to policy drafting, customer support, and technical development. The following table outlines leading Copilot alternatives, their strengths, and ideal use cases:

| Copilot Alternative | Key Strengths | Common Use Cases |
|---|---|---|
| Google Gemini | Web-grounded, integrated with Google Workspace | Research, summarization, content ideation |
| Claude (Anthropic) | Long-form memory, structured reasoning | Legal drafting, policy writing, internal Q&A |
| ChatGPT (OpenAI) | Plugins, rapid iteration, wide familiarity | Marketing, product, dev workflows |
| Perplexity AI | Real-time web search with citations | Analyst teams, research, synthesis |
| IBM watsonx | Access to on-prem models specialized for certain verticals | Support automation, internal workflows |
| Amazon Bedrock / SageMaker | Model hosting, private fine-tuning, customization | Backend LLMs, proprietary app integration |
| Open-source LLMs (Mistral, LLaMA, Falcon, etc.) | Control, privacy, flexibility in deployment | Experimental workflows, internal copilots |
| Vertical Copilots (e.g., Salesforce Einstein, SAP Joule) | Deep tool-specific context (e.g., Salesforce, SAP) | CRM, HR, ERP systems |

Each tool brings its own advantages. Some are built for depth (like Claude), others for reach and ecosystem integration (like Gemini). While Copilot shines in Microsoft-based environments, teams often find better task alignment elsewhere, depending on role and use case.

Enterprises that combine these models intelligently, matching AI capabilities with business function, can maximize efficiency and reduce reliance on a single vendor.

Why Different Teams Choose Different Copilot Alternatives

No single Generative AI model fits every workflow. Teams naturally choose tools based on tone, reasoning depth, speed, and how well the tool integrates with their environment.

Some tools offer concise responses; others excel at nuanced reasoning. Preferences vary: some teams favor embedded AI in familiar apps, while others need API-driven models for custom workflows.

Rather than enforcing one tool across the board, organizations are leaning toward a mix-and-match approach, adopting the best Copilot alternative for each task while maintaining oversight.

Challenges with Generative AI Adoption at Scale

As enterprises explore a wide array of Copilot alternatives, several operational challenges emerge. These issues often stem not from the tools themselves, but from how they’re introduced, adopted, and governed:

- Fragmented usage patterns: Different teams adopt different tools, some licensed and others free, without coordination.

- Lack of centralized visibility: There’s no unified way to monitor usage across departments or assess how models are being applied.

- Inconsistent integration experiences: Tools may or may not integrate with key platforms like email, CRM, or portals.

- Varying reasoning styles and response quality: Some models generate concise answers while others emphasize depth, creating uneven results.

- No policy enforcement layer: Most organizations lack the ability to enforce redaction, logging, or retention across tools.

- Shadow AI risks: When users experiment with unapproved tools, the risk of data leakage rises.

- Compliance complexity: Meeting audit requirements becomes difficult when Generative AI use isn’t logged or governed consistently.

These challenges highlight the need for structured orchestration where flexibility is balanced by control, and teams can use the right model without compromising oversight.

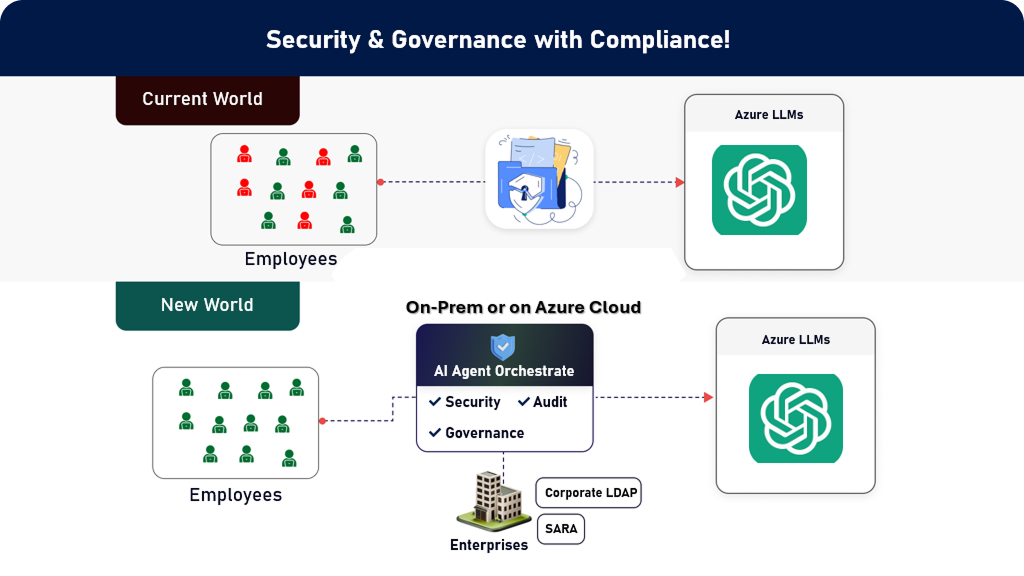

What if you could give your employees access to all these enterprise-grade models in a secure and compliant manner, with optimized pricing?

Microsoft Is a Great Option but You Deserve All Options

Introducing a Secure, Audit-Ready Microsoft Copilot Alternative

If you’re exploring a secure, compliant, and audit-ready alternative to Microsoft Copilot, it’s important to go beyond outputs and focus on infrastructure. Most tools offer quality generation, but few offer enterprise-level visibility and governance.

- This is where the LLM Orchestrator comes in: a single governed interface for secure access to LLMs such as IBM watsonx, Google Gemini, Amazon Bedrock, and Cohere, as well as GPT on Microsoft Azure.

- It doesn’t replace Microsoft Copilot. It includes GPT models hosted on Microsoft Azure, alongside other leading LLMs like Claude, Gemini, and watsonx. The key difference? You control how, where, and when these models are used, through a secure, audit-ready orchestration layer that optimizes cost, compliance, and performance across the board.

Core features of the LLM Orchestrator include:

- Provide secure access to models like ChatGPT, Claude, Gemini, and others

- Route prompts based on model fit, department, or task sensitivity

- Apply redaction and role-based controls in real time

- Maintain logs of all prompt and response activity

- Enforce policy centrally, regardless of which model is used

This unified platform gives teams the flexibility to work with the right tool for the job while IT and legal teams retain oversight. It’s a practical step for any enterprise evaluating a Microsoft Copilot alternative with lower cost, stronger control, and better scalability.
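To make the routing and redaction features above more concrete, here is a minimal Python sketch. It is not the LLM Orchestrator’s actual implementation: the model names, the redaction patterns, the set of sensitive departments, and the `route` function are all assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical model tiers; the names are placeholders, not real endpoints.
ROUTES = {
    "restricted": "on-prem-model",
    "default": "general-model",
}

# Illustrative redaction patterns; a real policy would cover far more.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Departments treated as sensitive (an assumption for this sketch).
SENSITIVE_DEPARTMENTS = {"legal", "hr"}


@dataclass
class RoutedPrompt:
    model: str
    prompt: str
    redactions: list


def redact(text):
    """Replace each pattern match with a [LABEL] placeholder."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


def route(prompt, department):
    """Redact the prompt, then pick a model by department and sensitivity."""
    clean, found = redact(prompt)
    restricted = department.lower() in SENSITIVE_DEPARTMENTS or bool(found)
    model = ROUTES["restricted" if restricted else "default"]
    return RoutedPrompt(model=model, prompt=clean, redactions=found)
```

In this sketch, a prompt from a sensitive department, or one in which a pattern matched, is sent to the restricted tier, and the prompt is redacted before it reaches any model. A production policy layer would likely add role checks and logging around the same decision point.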

Built for Enterprise Compliance from Day One

As Generative AI adoption expands, so do the expectations from auditors, regulators, and internal risk teams. Simply using secure tools isn’t enough: organizations must demonstrate that usage is controlled, logged, and aligned with existing compliance frameworks. To meet these expectations, a platform should:

- Align with key regulations like HIPAA, GDPR, SOX, CJIS, and SEC 17a-4

- Store prompts and outputs immutably to enable traceability

- Issue real-time alerts when sensitive data is entered or generated

- Offer role-based controls to limit access and enforce redaction where necessary

The LLM Orchestrator incorporates these capabilities by design, helping organizations scale responsibly without adding manual overhead. Teams can continue using AI tools for business automation while IT, legal, and security teams maintain full oversight.

With compliance integrated from day one, enterprises can focus on innovation without worrying about audit readiness.
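One common way to make stored prompts and outputs traceable is a hash-chained, append-only log, in which each entry commits to the one before it. The sketch below illustrates that general technique only; the `AuditLog` class and its fields are hypothetical and do not describe how the LLM Orchestrator stores data. Note that a hash chain makes tampering detectable rather than impossible, so regulated deployments typically pair it with write-once storage.

```python
import hashlib
import json


class AuditLog:
    """Append-only log in which each entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder hash used before the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record, prev_hash):
        # Serialize deterministically so the same record always hashes the same.
        payload = json.dumps(record, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, user, model, prompt, response):
        """Add one prompt/response record and return its hash."""
        record = {"user": user, "model": model, "prompt": prompt, "response": response}
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {"record": record, "prev": prev_hash, "hash": self._digest(record, prev_hash)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True
```

Calling `verify()` after editing any stored record returns `False`, which is the property an auditor cares about. Timestamps are left out here to keep the example deterministic; a real log would include them in each record.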

From Chaos to Clarity: One Dashboard, Total Oversight

Managing Generative AI across departments becomes complex without a unified view. With different teams using different Copilot alternatives, oversight is often fragmented or entirely absent.

A single dashboard provides:

- Live logs of all prompt and response activity across tools

- Usage tracking by model, team, or individual

- Real-time alerts tied to policy violations or sensitive inputs

- Audit-friendly reports for legal, compliance, and security reviews

- Cross-model transparency that spans both open and commercial LLMs

This visibility transforms Generative AI from a scattered experiment into a governed asset. Instead of reacting to issues, enterprises can proactively guide usage, ensure alignment, and meet internal and external expectations with ease.

Botonomics: Cost Control That Grows with You

As enterprise adoption of Generative AI accelerates, so does the need for financial control and governance at scale. Traditional tools often come with fixed seat-based pricing, siloed access, and limited visibility, leading to budget overruns, tool sprawl, and compliance risks.

This is where Botonomics comes into play: a smarter approach to managing cost, control, and access across your entire AI ecosystem.

The LLM Orchestrator enables:

- Unified Access to All Leading LLMs: Gain secure, policy-controlled access to GPT (via Azure), Claude, Gemini, Amazon Bedrock, watsonx, and open-source models, all through a single interface. No silos, no redundant integrations, just unified, governed AI access.

- Token-Based, Usage-Aligned Pricing: Move beyond seat licenses. With token-based pricing, you pay only for what’s used. Route basic tasks to lightweight models and reserve premium models for high-value scenarios. This keeps costs under control without sacrificing capability.

- Smart Model Routing by Use Case: Assign the right model for each workflow based on reasoning depth, privacy requirements, or business function. Legal teams, support agents, and analysts all get the best-fit AI, without compromise.

- Real-Time Cost and Usage Visibility: Monitor consumption by team, model, or task. Analyze usage trends, track spend per token, and generate reports for finance, compliance, and security reviews.
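The token-based pricing and usage visibility described above come down to simple arithmetic over usage events. The following Python sketch shows one way such a report could be computed; the model names and per-token prices are made up for illustration and are not real vendor rates or LLM Orchestrator pricing.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; not real vendor rates.
PRICE_PER_1K_TOKENS = {
    "lightweight-model": 0.5,
    "premium-model": 10.0,
}


def usage_report(events):
    """Aggregate cost by team and by model from (team, model, tokens) events."""
    by_team = defaultdict(float)
    by_model = defaultdict(float)
    for team, model, tokens in events:
        cost = tokens / 1000 * PRICE_PER_1K_TOKENS[model]
        by_team[team] += cost
        by_model[model] += cost
    return {"by_team": dict(by_team), "by_model": dict(by_model)}


# Example: basic support tasks go to the lightweight model,
# while legal review is reserved for the premium model.
events = [
    ("support", "lightweight-model", 4000),
    ("legal", "premium-model", 2000),
    ("support", "premium-model", 1000),
]
report = usage_report(events)
```

Under these assumed prices, the support team’s 4,000 lightweight tokens cost a fifth of its 1,000 premium tokens, which is the kind of difference that routing basic tasks to lighter models is meant to exploit.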

The result?

Copilot-like experiences across your organization, with lower cost, stronger governance, and complete flexibility.

That’s the power of orchestrated AI. Welcome to intelligent Botonomics.

Built for Real Enterprise Environments

A true Copilot alternative must operate within real enterprise environments, not just in demos or isolated teams. That means supporting the complex identity, deployment, and integration needs found in large organizations.

The LLM Orchestrator is designed with this in mind:

- Identity integration: Works with SAML, LDAP, and existing IAM systems for secure access control

- Deployment flexibility: Available for cloud, private infrastructure, and hybrid deployments

- System compatibility: Integrates with messaging platforms, internal portals, APIs, and enterprise apps

Whether your enterprise is cloud-native or data-sensitive, the orchestrator adapts to your setup without forcing platform migrations or heavy lifts.

This ensures AI can be delivered where employees already work, securely and consistently.

Summary: Don’t Stop Generative AI Adoption. Make It Safe, Scalable, and Compliant

Adopting Generative AI across the enterprise doesn’t mean committing to a single vendor. In fact, some of the most successful strategies involve using a mix of Copilot alternatives aligned to business needs.

The key isn’t more tools; it’s smarter orchestration. With a platform like the LLM Orchestrator, enterprises can:

- Give users access to the right model for the task

- Ensure every interaction is logged, secure, and policy-compliant

- Avoid redundancy and shadow usage by consolidating governance

- Optimize spending through usage-based insights and controls

Generative AI can bring immense value. But to truly scale, it must be governed, trackable, and adaptable to evolving enterprise needs.

With the right approach, enterprises can empower every team to use Generative AI confidently, without compromising on security or control.


See the Microsoft Copilot Alternative for Full Generative AI Governance

If you’re ready to bring clarity, compliance, and cost efficiency to your Generative AI strategy, now is the time to take the next step. The LLM Orchestrator gives you full visibility and control without slowing down productivity or innovation.

- Map Your Compliance Requirements: See how your needs align with audit-ready Generative AI infrastructure

- Talk to an Enterprise AI Specialist: Get personalized guidance for your environment

Don’t pause Generative AI adoption. Go forward with confidence, policy alignment, and flexibility built in.