Secure, Audit-Ready AI Agent Orchestrator for Your Enterprise

GenAI Is Here — But So Are the Risks


Generative AI is rapidly transforming workplaces worldwide, but in the race for productivity, many organizations are overlooking a critical threat: compliance failure. Regulations and standards such as HIPAA, GDPR, SEC Rule 17a-4, SOX, and CJIS dictate strict mandates around data privacy, retention, and auditability. Enterprises that allow uncontrolled Generative AI usage risk multimillion-dollar penalties and regulatory scrutiny.


According to Gartner, within the next five years 70% of enterprises will integrate Generative AI into everyday workflows, yet only 25% will have the governance frameworks needed to ensure compliant and auditable use. In other words, organizations are scaling AI before securing it, and that is a recipe for disaster.

CIOs and CISOs face a grim paradox: powerful AI tools like ChatGPT and Claude increase team velocity but also enable unsanctioned data sharing. These tools often lack:

- Usage logs or traceability of prompts and responses
- Retention or redaction controls for sensitive data
- Role-based access or governance policies
- Visibility into model outputs that could trigger compliance flags

Violations are no longer theoretical:

- GDPR fines can reach €20 million or 4% of annual global turnover, whichever is higher
- HIPAA civil penalties can reach $1.5 million or more per violation category per year
- SEC Rule 17a-4 requires broker-dealers to retain business communications in WORM-compliant (non-rewriteable, non-erasable) storage
- SOX and CJIS call for role-controlled, tamper-resistant audit trails

Without proper oversight, Generative AI becomes a shadow channel that leaks IP, violates privacy laws, and erodes enterprise trust. The most alarming part? There are no logs the enterprise controls. Once submitted, LLM prompts and responses are neither recorded nor recoverable by the organization, leaving IT, legal, and compliance teams blind.

This is not just a technical shortcoming; it’s a regulatory hazard. In verticals like finance, healthcare, and public safety, it’s already becoming a reason to halt Generative AI adoption altogether.

It’s clear: enterprises need more than innovation. They need secure, compliant, and IT-governed orchestration for AI tools. This page introduces the solution, the AI Agent Orchestrator, designed to deliver GenAI agility without sacrificing visibility, trust, or control.

Rise in Generative AI Adoption Across Global Workforces

Generative AI is no longer a futuristic concept—it’s now embedded across modern enterprises. Whether sanctioned or not, AI models like ChatGPT, DeepSeek, Claude, and Gemini are already playing critical roles in core workflows across departments.

- 85% of enterprise employees are using GenAI tools for work-related tasks, with or without formal approval (PwC GenAI Pulse Survey)
- 60% of IT executives report that GenAI is being accessed in their organization without centralized controls (McKinsey)
- “By 2027, more than 40% of AI-related data breaches will be caused by the improper use of generative AI across borders,” according to Gartner, Inc.

– Gartner Press Release, “Gartner Predicts 40% of AI Data Breaches Will Arise from Cross-Border GenAI Misuse by 2027,” February 17, 2025.

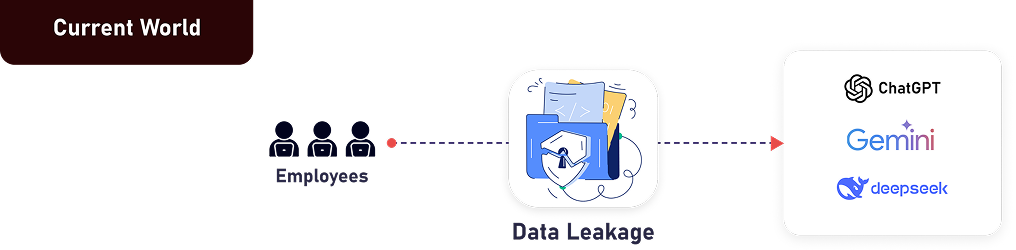

Unmonitored Access Creates Two Blind Spots: Data Leakage and Compliance Gaps

Blind Spot 1: Data Leakage

Despite GenAI’s transformative potential, most enterprise usage today remains outside the guardrails of formal IT governance. Employees frequently paste sensitive data into public LLMs without understanding the data-leakage risks.

- No redaction or DLP controls on inputs (e.g., PII, PHI, customer financials)
- Prompts can contain confidential documents, legal case summaries, or client strategies
- Outputs may be reused in emails, reports, or public presentations without tracking their origin or accuracy

The result: inadvertent leaks of proprietary and regulated information into models operated by third-party vendors, where the data may be retained, used for retraining, or even exposed through future prompts.


Blind Spot 2: Compliance Gaps

Regulatory bodies are ramping up enforcement, and enterprises using GenAI without visibility or controls are entering dangerous territory.

In many organizations, employees unknowingly share proprietary or sensitive information with public AI models. These exchanges are often retained by third-party vendors for extended durations—sometimes beyond 90 days—without any organizational oversight.

Without clear audit logs, control over where data resides, or mechanisms to govern usage, companies face elevated exposure to non-compliance and legal consequences.

- No logging of GenAI queries or outputs for audit trails
- Lack of retention or versioning for generated content
- No enforceable access policies by role, department, or region
- No alerts or anomaly detection on risky prompts or prohibited topics

This puts organizations at odds with major compliance mandates such as:

- HIPAA (sharing health data without a recordable trail)
- GDPR (processing personal data without a lawful basis such as consent, or without the ability to delete it)
- SOX and CJIS (lack of system logging and auditability)
- SEC 17a-4 (no WORM-compliant retention of business communications)

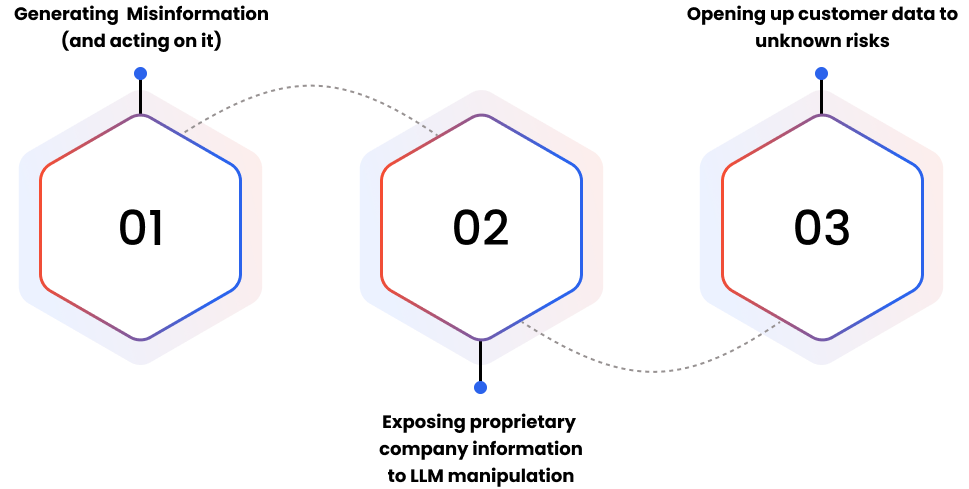

The Challenge

The Cost of Losing Control

What’s Missing in Most Enterprises Today?

- No central visibility into GenAI prompts or outputs
- Zero logging, retention, or traceability
- No role-based access or usage limits
- Unsustainable seat-based AI licensing

Unmonitored GenAI usage can breach laws, leak IP, and trigger millions in fines. The solution isn’t to restrict Generative AI—it’s to enable it securely with policy, logging, and control.

Introducing AI Agent Orchestrator

Secure, Compliant, and Centralized GenAI Control

Streebo, a leading digital transformation and AI company, has introduced the AI Agent Orchestrator, a secure GenAI access gateway designed specifically for enterprise needs. The solution consolidates access to multiple LLM platforms, such as Microsoft Copilot Studio and Enterprise GPT, Google Gemini, Amazon Bedrock, and IBM watsonx Orchestrate, while enforcing airtight governance, compliance, and control.

This is not just another GenAI tool; it’s a compliance-first orchestration layer that acts as a smart, policy-driven firewall between your enterprise data and third-party AI models.

Unlike browser plugins or siloed AI assistants, the orchestration platform routes all interactions through a central, auditable, and controlled environment, ensuring that no prompt, response, or user action goes unlogged or unmonitored.
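To make the idea of a single, auditable path concrete, here is a minimal Python sketch of a gateway that checks a per-user allow-list, runs a redaction hook, calls a model, and logs the exchange. Everything in it is an assumption for illustration: the `Gateway` class, its policy shape, and the stand-in model functions are hypothetical and do not reflect the product’s actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# A model backend is modeled as a plain function from prompt to response.
# These stand in for real vendor SDK calls, which are not shown here.
ModelFn = Callable[[str], str]


@dataclass
class Gateway:
    """Hypothetical central gateway: every request is checked, redacted, and logged."""
    allowed: Dict[str, Set[str]]   # user -> model names that user may call (assumed policy shape)
    models: Dict[str, ModelFn]     # model name -> backend callable
    redact: Callable[[str], str]   # DLP hook applied before the prompt leaves the enterprise
    log: List[dict] = field(default_factory=list)

    def ask(self, user: str, model: str, prompt: str) -> str:
        # Deny by default: unknown users or unlisted models are refused, and the refusal is logged.
        if model not in self.allowed.get(user, set()):
            self.log.append({"user": user, "model": model, "status": "denied"})
            raise PermissionError(f"{user} may not use {model}")
        safe_prompt = self.redact(prompt)
        response = self.models[model](safe_prompt)
        # Log the sanitized prompt and the response so no exchange goes unrecorded.
        self.log.append({"user": user, "model": model, "status": "ok",
                         "prompt": safe_prompt, "response": response})
        return response


# Usage with toy stand-ins for a model and a redaction rule.
gw = Gateway(
    allowed={"alice": {"echo-model"}},
    models={"echo-model": lambda p: p.upper()},
    redact=lambda p: p.replace("secret", "[REDACTED]"),
)
print(gw.ask("alice", "echo-model", "the secret plan"))  # THE [REDACTED] PLAN
```

Keeping every call behind one `ask` method is the design point of this sketch: there is no side door through which a prompt could reach a model without passing the policy check and the log.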

Why It Matters:

- Stops Regulatory Violations Before They Happen
  Apply SEC 17a-4, HIPAA, GDPR, SOX, and CJIS controls in real time.
- One Gateway. Any Model. Any Department.
  Access LLMs across vendors with unified security and no vendor lock-in.
- IT-Centric Deployment
  Designed for IT, legal, and compliance teams to retain full control of Generative AI usage across the organization.
- Works Where You Work
  Integrated with enterprise systems, identity providers, and collaboration platforms.

From finance and legal to HR, procurement, and sales—AI Agent Orchestrator gives enterprises a secure path to scale Generative AI across the workforce without creating audit nightmares or compliance black holes.

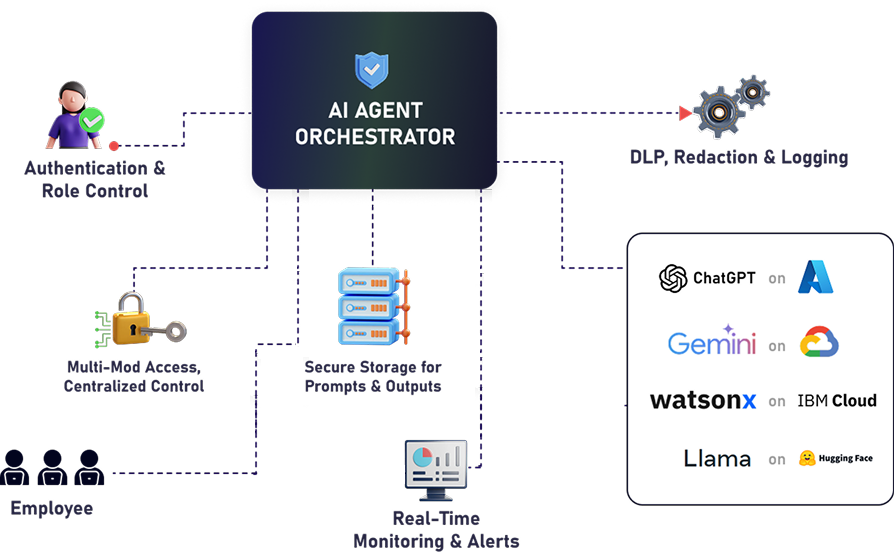

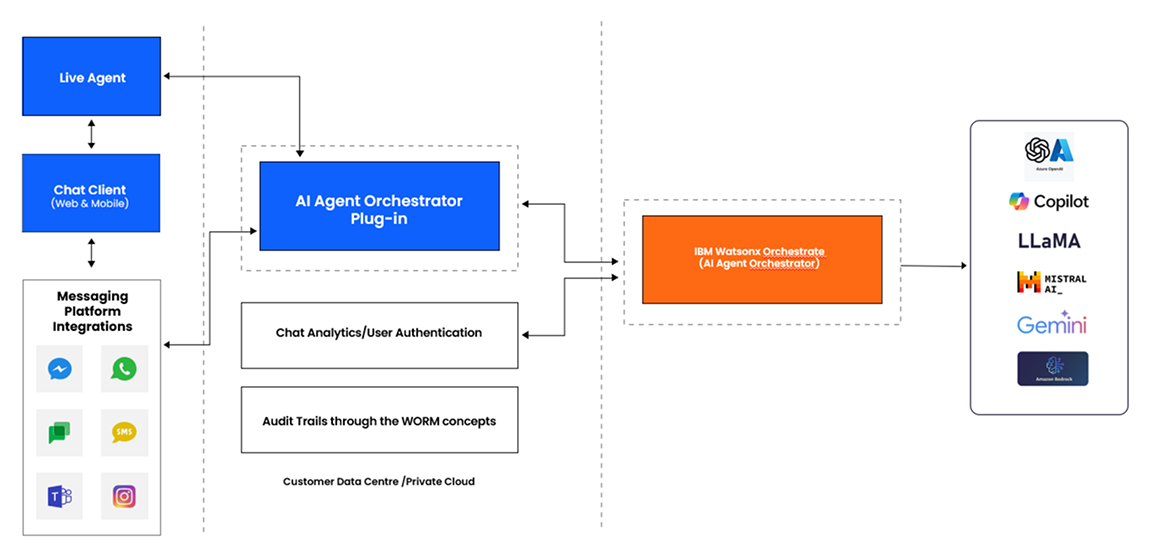

AI Agent Orchestration Architecture

Built for Enterprise Trust & Compliance

The AI orchestration and automation platform is not just a security layer; it is the central nervous system for all Generative AI activity within the enterprise. Designed with compliance, auditability, and enterprise scalability in mind, it governs every user interaction with AI tools through a unified, policy-enforced architecture.

This orchestrator architecture ensures that AI adoption across departments remains safe, controllable, and fully visible to IT and compliance teams, without disrupting end-user workflows or restricting innovation.

Here’s how the architecture works, layer by layer

This architecture ensures every GenAI interaction is secure, observable, and fully governed from end to end—turning potential blind spots into control points.

Key Differentiators of the Agentic AI Orchestration

Enterprise-Ready, Globally Compliant

Generative AI is reshaping productivity, but without proper guardrails, it opens a floodgate of legal and security concerns. The AI Agent Orchestrator has been engineered to bridge this gap—offering not just AI access, but enterprise-class governance, compliance, and observability. Here’s how it stands apart from typical LLM interfaces:

01

Unified Governance Gateway for Enterprise-Wide GenAI Use

Unlike fragmented team-specific implementations, the orchestrator acts as a single entry and exit point for all Generative AI interactions across the organization. It centralizes governance, policies, and visibility—ensuring consistent control regardless of the model or use case.

02

Multi-Model Compatibility Without Lock-In

Organizations can securely route requests across leading AI platforms such as IBM watsonx Orchestrate, Microsoft Copilot Studio, Azure OpenAI, AWS Bedrock, and Google Vertex AI, as well as open-weight and third-party models such as Mistral, Cohere, and Llama, depending on the task, region, or sensitivity. No vendor lock-in. No retooling required.
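Routing by task, region, or sensitivity can be pictured as a lookup over a routing table. The sketch below assumes a hypothetical table keyed by hosting region and a numeric sensitivity tier; the model names are placeholders, not real endpoints.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Route:
    model: str            # placeholder model/endpoint name
    region: str           # where the endpoint processes data
    max_sensitivity: int  # highest sensitivity tier it may receive (0 = public)


# Hypothetical routing table, listed in order of preference.
ROUTES: List[Route] = [
    Route("cloud-model-eu", "eu", 1),
    Route("cloud-model-us", "us", 1),
    Route("self-hosted-model-eu", "eu", 3),  # e.g. an open-weight model run in-house
]


def pick_route(sensitivity: int, region: str, routes: List[Route] = ROUTES) -> Route:
    """Return the first route in the requester's region cleared for this sensitivity tier."""
    for route in routes:
        if route.region == region and sensitivity <= route.max_sensitivity:
            return route
    raise LookupError(f"no approved route for sensitivity {sensitivity} in region {region}")


print(pick_route(0, "eu").model)  # cloud-model-eu
print(pick_route(2, "eu").model)  # self-hosted-model-eu
```

Because the preference order lives in the table, a policy change in this sketch is a data edit rather than a code change, and a request with no approved route fails closed.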

03

Granular Role-Based Access Control (RBAC) with Full Context Awareness

Access permissions are no longer one-size-fits-all. Enterprises can define rules based on job role, seniority, geography, department, or project. Authentication integrates with enterprise identity systems through LDAP, Active Directory, and SAML for seamless onboarding and policy enforcement.
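Rules that combine role, department, and geography amount to attribute-based checks. Here is a minimal deny-by-default sketch, assuming user attributes have already been resolved from the identity provider (for example, from directory groups or SAML assertion attributes). The policy format and capability names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    role: str
    department: str
    region: str


# Hypothetical policy: a rule grants its capabilities when every attribute matches.
# "*" is a wildcard. Attribute values and capability names are illustrative only.
POLICY = [
    {"role": "*", "department": "legal", "region": "*", "allow": {"summarize", "draft"}},
    {"role": "engineer", "department": "*", "region": "*", "allow": {"code_generation"}},
    {"role": "*", "department": "hr", "region": "eu", "allow": {"summarize"}},
]


def _matches(rule_value: str, actual: str) -> bool:
    return rule_value == "*" or rule_value == actual


def is_allowed(user: User, capability: str, policy=POLICY) -> bool:
    """Deny by default; allow only if some rule matching the user grants the capability."""
    return any(
        _matches(rule["role"], user.role)
        and _matches(rule["department"], user.department)
        and _matches(rule["region"], user.region)
        and capability in rule["allow"]
        for rule in policy
    )


print(is_allowed(User("analyst", "hr", "eu"), "summarize"))  # True
print(is_allowed(User("analyst", "hr", "us"), "summarize"))  # False
```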

04

Immutable Audit Trails Aligned with Global Regulations

All GenAI activity—prompts, responses, redactions, access history—is captured in WORM-compliant, encrypted NoSQL storage. These immutable logs support global mandates like:

- SEC 17a-4 (U.S.) – Record retention for broker-dealer communications
- HIPAA (U.S.) – Auditability for protected health data
- GDPR (EU) – Traceability and the right to erasure
- SOX (U.S.-listed public companies) – Integrity of financial reporting systems
- CJIS (U.S.) – Law enforcement data protections
- PIPEDA (Canada), LGPD (Brazil), PDPA (Singapore), and others
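WORM immutability is normally enforced by the storage layer itself. As a complementary application-level technique, a hash chain makes any after-the-fact edit detectable because each entry’s SHA-256 digest covers the previous entry’s digest. This technique is swapped in here for illustration and is not taken from the product. The `HashChainedLog` class is hypothetical.

```python
import hashlib
import json
from typing import List


class HashChainedLog:
    """Append-only log in which each entry commits to the previous one via SHA-256.

    This shows tamper evidence only; WORM guarantees come from the storage layer,
    which this sketch does not model.
    """

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _digest(prev: str, record: dict) -> str:
        payload = json.dumps(record, sort_keys=True)  # canonical serialization
        return hashlib.sha256((prev + payload).encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited, reordered, or removed entry breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


log = HashChainedLog()
log.append({"user": "alice", "event": "prompt"})
log.append({"user": "alice", "event": "response"})
print(log.verify())  # True
log.entries[0]["record"]["user"] = "mallory"  # simulate an after-the-fact edit
print(log.verify())  # False
```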

05

Built-In DLP, Redaction & Retention Management

The orchestrator features enterprise-grade Data Loss Prevention (DLP) systems that inspect every prompt and output in real time, scanning for:

- Personally Identifiable Information (PII)
- Protected Health Information (PHI)
- Confidential business data
- Source code, IP, or legal text

Risky inputs are automatically redacted or blocked, and outputs are retained per organizational retention policies.
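Real-time inspection comes down to detecting sensitive patterns, then redacting or blocking. The sketch below uses a few regular expressions as hypothetical detectors, plus a hypothetical rule that a U.S. Social Security number blocks the prompt outright. Enterprise DLP engines use far richer detection than this.

```python
import re
from typing import List, Tuple

# Hypothetical detectors; production DLP combines checksums, context, and classifiers.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}
BLOCK_TYPES = {"US_SSN"}  # hypothetical policy: these types block the prompt entirely


def scan_and_redact(text: str) -> Tuple[str, List[str], bool]:
    """Return (redacted_text, detected_types, blocked)."""
    found: List[str] = []
    for label, pattern in PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label}]", text)  # replace each match with a typed placeholder
    blocked = any(label in BLOCK_TYPES for label in found)
    return text, found, blocked


print(scan_and_redact("Email jane.doe@example.com, SSN 123-45-6789"))
# ('Email [EMAIL], SSN [US_SSN]', ['EMAIL', 'US_SSN'], True)
```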

06

Consent, Retention, and Deletion Controls

In line with GDPR, HIPAA, and other global privacy mandates, the orchestrator ensures:

- Explicit user consent for AI tool usage
- Retention schedules based on policy, not vendor defaults
- Full support for data erasure requests

This prevents unauthorized processing of user or customer data and reduces regulatory exposure.
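Policy-driven retention and erasure can be sketched as two filters over stored records. One drops records past their retention window, and the other drops all records for a data subject who requested deletion. The categories and day counts below are illustrative assumptions, not recommended or legally required periods.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List

# Illustrative retention schedule in days per record category (set by policy, not vendor defaults).
RETENTION_DAYS: Dict[str, int] = {"chat": 90, "hr": 365, "finance": 2190}


@dataclass(frozen=True)
class Record:
    subject_id: str   # the person the data relates to
    category: str     # key into RETENTION_DAYS
    created: datetime


def expires_at(rec: Record, schedule: Dict[str, int] = RETENTION_DAYS) -> datetime:
    return rec.created + timedelta(days=schedule[rec.category])


def purge_expired(records: List[Record], now: datetime) -> List[Record]:
    """Keep only records that are still inside their retention window."""
    return [r for r in records if expires_at(r) > now]


def erase_subject(records: List[Record], subject_id: str) -> List[Record]:
    """Drop every record about one data subject, e.g. after a verified erasure request."""
    return [r for r in records if r.subject_id != subject_id]


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
records = [Record("u1", "chat", t0), Record("u2", "finance", t0), Record("u1", "hr", t0)]
kept = purge_expired(records, now=t0 + timedelta(days=100))  # the 90-day chat record expires
print([r.category for r in erase_subject(kept, "u1")])       # ['finance']
```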

07

Transparent Usage Journals for Legal & Business Review

Every GenAI interaction is logged with detailed metadata:

- User identity
- Prompt and output
- Model used and response confidence
- Redaction applied
- Purpose tag (e.g., HR request, legal summary, code generation)

These journals support not just IT compliance but also legal defensibility, business process audits, and cross-department reviews.
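The journal fields listed above map naturally onto a typed record that reviewers can filter. The following minimal sketch uses hypothetical field names and sample data. `confidence` is optional because not every model or provider exposes a score.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class JournalEntry:
    user_id: str
    model: str
    prompt: str                   # stored after redaction
    output: str
    confidence: Optional[float]   # None when the model or provider exposes no score
    redactions: Tuple[str, ...]   # detector labels applied to the prompt
    purpose: str                  # e.g. "hr_request", "legal_summary", "code_generation"
    timestamp: str                # ISO 8601, UTC


def to_json_line(entry: JournalEntry) -> str:
    """Serialize one entry as a JSON line for append-only storage."""
    return json.dumps(asdict(entry), sort_keys=True)


def entries_for_review(journal: List[JournalEntry], purpose: Optional[str] = None,
                       user_id: Optional[str] = None) -> List[JournalEntry]:
    """Filter the journal by purpose tag and/or user for a legal or business review."""
    return [e for e in journal
            if (purpose is None or e.purpose == purpose)
            and (user_id is None or e.user_id == user_id)]


# Hypothetical sample entries.
journal = [
    JournalEntry("alice", "model-a", "Summarize the [EMAIL] thread", "Summary...", None,
                 ("EMAIL",), "legal_summary", "2025-01-06T09:00:00Z"),
    JournalEntry("bob", "model-b", "Write a unit test", "def test_...", 0.9,
                 (), "code_generation", "2025-01-06T09:05:00Z"),
]
print([e.user_id for e in entries_for_review(journal, purpose="legal_summary")])  # ['alice']
```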

08

Omnichannel Enablement, Unified Control

The orchestrator supports GenAI access from:

- Browser-based apps
- Internal portals
- Slack, MS Teams, and enterprise messaging platforms
- APIs and DevOps tools

Regardless of how GenAI is used, all activity flows through the same policy, logging, and retention stack—ensuring consistent enterprise-wide compliance.

09

Deployment Flexibility: Public Cloud, Private Cloud, or On-Premise

Whether operating in a highly regulated sector or dealing with national data sovereignty laws, the orchestrator adapts. It can be deployed on:

- Major public clouds (AWS, Azure, IBM Cloud, GCP)
- Private data centers
- Hybrid deployments with on-premise storage and cloud-hosted models

You retain complete control over where your data resides and how it is processed.

10

Always Audit-Ready by Design

This isn’t an afterthought—it’s a default. The orchestrator satisfies core requirements for:

- Immutable logging (for financial and legal records)
- Retention timelines (industry-specific rules)
- Data provenance (where outputs came from)
- Access restriction (per department, use case, or location)


The Real Cost Choice for Enterprises

Licenses vs. Orchestration

Option 1

Seat-Based Licensing for Each Platform

To enable GenAI responsibly, an enterprise must ensure:

- Access to multiple LLMs
- Compliance with data privacy and record-keeping regulations (HIPAA, GDPR, SOX, CJIS, and more)
- Full audit trails and access logs
- Data storage aligned with retention policies

But here’s the problem: individual licensing models are expensive and fragmented.

- You must purchase separate licenses for each GenAI platform.
- Then extend those licenses across teams: HR, Legal, Finance, Marketing, and more.
- Add-on compliance features often cost extra, and even then, no centralized audit or governance is guaranteed.
- Costs scale per seat, not per use, meaning you’re paying even for inactive users.

This approach can quickly become financially unsustainable while still exposing your organization to regulatory risk due to lack of unified control.

Option 2

AI Agent Orchestrator — Unified Access, Usage-Based Pricing

The AI Agent Orchestrator (LLM Agent Orchestration) takes a completely different approach:

Single, Secure Gateway to All Leading LLMs

- Users can access IBM watsonx, Azure OpenAI, AWS Bedrock, Google Vertex AI, and others from one interface.
- No need to juggle individual vendor agreements, tools, or portals.
- Centralized logging, DLP, access control, and storage, all IT-governed.

Pay Only for What You Use

- No per-seat licensing: you’re billed on API usage, not the number of users.
- This model lets you pilot, scale, and manage budgets more predictably.
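The trade-off between the two pricing models is simple arithmetic. The sketch below compares them using purely hypothetical numbers. The seat price, token volume, per-token rate, and platform fee are all placeholders, not quoted prices. The result depends on actual consumption, so heavy usage can narrow or reverse the gap.

```python
def seat_cost(seats: int, price_per_seat: float, platforms: int) -> float:
    """Monthly cost when every seat is licensed on every platform, whether used or not."""
    return seats * price_per_seat * platforms


def usage_cost(tokens: int, price_per_million_tokens: float, platform_fee: float = 0.0) -> float:
    """Monthly cost when billed only on API tokens consumed, plus an optional flat fee."""
    return tokens / 1_000_000 * price_per_million_tokens + platform_fee


# Hypothetical scenario: 1,000 employees licensed on 2 platforms at $30/seat/month,
# versus 300 active users consuming 200,000 tokens each per month.
seats_total = seat_cost(seats=1_000, price_per_seat=30.0, platforms=2)
usage_total = usage_cost(tokens=300 * 200_000, price_per_million_tokens=10.0, platform_fee=5_000.0)
print(seats_total, usage_total)  # 60000.0 5600.0
```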

Business Benefits

01

Lower Total Cost of Ownership

Eliminate the need for multiple seat-based licenses—pay only for actual usage across all major LLMs via API-based pricing.

02

Full Compliance & Governance

Maintain enterprise-grade control with centralized logging, DLP, audit trails, and role-based access aligned to global regulatory standards.

03

Scalable, Flexible AI Adoption

Securely extend GenAI across departments with unified access to IBM watsonx Orchestrate, Azure OpenAI, AWS Bedrock, and more—all under IT’s oversight.

Start Now – Scale Securely

For enterprises seeking a ChatGPT or Microsoft Copilot Studio alternative that aligns with compliance and budget goals, the AI Agent Orchestrator offers a centralized, secure interface to multiple GPT alternatives at lower cost. Whether your teams prefer Google Gemini, DeepSeek, or other AI tools for business automation, the orchestrator enables seamless access under IT governance. This flexibility not only supports regulatory alignment but also eliminates vendor lock-in while keeping costs predictable and tied to actual usage.

Unlock the full power of Generative AI without sacrificing compliance, visibility, or budget control. Deploy the AI Agent Orchestrator today as your enterprise-wide, regulation-ready gateway to secure multi-LLM access.